Introduction

I have been using open source libraries and projects for several years now, so I feel it’s about time I started contributing to the open source community myself. I am kicking this off with my first open source library: SkiaSharp.Waveform.

What is it?

From the name, developers can probably work it out. For everyone else, it is a code library that makes visualising audio easier, using Skia, a graphics library made by Google.

Why?

In an internal project, one of our designers included a waveform and felt it was a very important UX feature. This led me down my usual route from “that looks cool” to “damn, I have to make this” to “I wonder if there’s a library that already does this?”

I couldn’t find a library that was close enough to our use case. So, with a day set aside for experimenting, I built a waveform that suited our purpose.

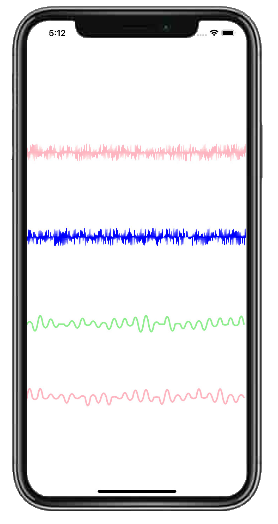

How does it look?

I think it looks pretty, but then again, I did make it... what do you guys think?

How does it work?

This library is, first and foremost, for visualisation. Right now, all it does is take in some values and display them. It takes an array of values from 0 to 1 (values above 1 are accepted but clipped to 1) and draws a line through them. Think of it as a rectangle with a horizontal line through the middle: a value of 0 is drawn on that middle line, and a value of 1 is drawn at either the top or the bottom edge of the rectangle. Successive values alternate between above and below the middle line.
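To make the geometry concrete, here is a minimal sketch in Python of how values could be mapped to points inside that rectangle. It is not the library's code (SkiaSharp.Waveform is a .NET library), and `waveform_points` is a hypothetical helper. It assumes the points are spread evenly across the width, that negative values are clamped to 0, and that y grows downward, as it does on a Skia canvas.

```python
from typing import List, Tuple


def waveform_points(values: List[float], width: float, height: float) -> List[Tuple[float, float]]:
    """Map normalised values to (x, y) points inside a width x height rectangle.

    Hypothetical helper: 0 sits on the horizontal centre line, 1 reaches the
    top or bottom edge, and successive points alternate above and below.
    """
    if not values:
        return []
    mid_y = height / 2
    # Assumption: first point at x=0, last point at x=width, evenly spaced.
    step = width / (len(values) - 1) if len(values) > 1 else 0.0
    points = []
    for i, value in enumerate(values):
        # Values above 1 are cut off; clamping negatives to 0 is an assumption.
        clipped = min(max(value, 0.0), 1.0)
        # y grows downward, so "above the centre line" means a smaller y.
        direction = -1 if i % 2 == 0 else 1
        y = mid_y + direction * clipped * mid_y
        points.append((i * step, y))
    return points


print(waveform_points([0.0, 1.0, 1.5, 0.5], width=30, height=100))
```

Drawing the waveform is then a matter of joining these points into a path and stroking it on the canvas; that step is not shown here.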

Is it customisable?

Sure! Properties you can customise at runtime include the colour, the spacing and the offset (useful for moving the waveform as if it were playing back). This should give developers enough basic control over the appearance to suit a variety of use cases. We’ve used it to show the user when audio is being recorded and where they are in a recording during playback, using a higher frequency and wider spacing while recording, and a lower frequency and narrower spacing during playback.
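The sketch below shows one way spacing and offset could interact to produce the scrolling effect. It is an illustration under stated assumptions, not the library's API: `visible_samples` is a hypothetical helper, each value is assumed to sit `spacing` pixels after the previous one, and the offset is assumed to shift the whole waveform to the left.

```python
from typing import List, Tuple


def visible_samples(values: List[float], spacing: float, offset: float, width: float) -> List[Tuple[float, float]]:
    """Return (x, value) pairs that land inside a view of the given width.

    Hypothetical helper: value i is placed at i * spacing, then the whole
    waveform is shifted left by `offset` pixels. Increasing `offset` a
    little every frame makes the waveform scroll, as if playing back.
    """
    visible = []
    for i, value in enumerate(values):
        x = i * spacing - offset
        # Only keep values that fall inside the visible area.
        if 0 <= x <= width:
            visible.append((x, value))
    return visible


print(visible_samples([0.1, 0.2, 0.3, 0.4, 0.5], spacing=10, offset=15, width=20))
```

With a fixed width, wider spacing means fewer values on screen at once, which is one way to get the different recording and playback looks described above.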

What’s next?

In the future, the library should be able to generate waveforms from a passed-in audio file rather than an array of values. This will take more work off the developer, making the library easier still to use. Adding markers and playheads is high on my to-do list, as is highlighting sections.
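Turning raw audio into the 0 to 1 array the waveform expects could look something like the sketch below. It shows one common approach (peak amplitude per bucket, normalised to the loudest bucket), not necessarily what the library will end up doing. It assumes the audio file has already been decoded into float samples; `peaks_from_samples` is a hypothetical name, and decoding is not shown.

```python
from typing import List, Sequence


def peaks_from_samples(samples: Sequence[float], bucket_count: int) -> List[float]:
    """Reduce decoded audio samples to at most `bucket_count` values in [0, 1].

    Hypothetical sketch: each bucket keeps its peak absolute amplitude, and
    the result is scaled so the loudest bucket becomes 1.
    """
    if bucket_count <= 0 or not samples:
        return []
    n = len(samples)
    # Never ask for more buckets than samples, so no bucket is empty.
    bucket_count = min(bucket_count, n)
    peaks = []
    for i in range(bucket_count):
        # Integer boundaries spread any remainder across the buckets.
        start = i * n // bucket_count
        end = (i + 1) * n // bucket_count
        peaks.append(max(abs(s) for s in samples[start:end]))
    loudest = max(peaks)
    if loudest == 0:
        # Pure silence: everything sits on the centre line.
        return [0.0] * bucket_count
    return [p / loudest for p in peaks]


print(peaks_from_samples([0.0, 0.5, -1.0, 0.25, 0.1, -0.2], bucket_count=3))
```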

Can I contribute?

Sure! Once I’ve set up a CI pipeline, I’ll be happy to accept pull requests with new features, bug fixes and enhancements. Just get in touch.