I know it feels like ChatGPT has only just arrived on the scene, but such is the pace of AI that last week saw the release of GPT-4, the latest version of the model behind it, and with it some exciting improvements.

What’s new with ChatGPT?

- Trained on a larger volume of data

- 40% more likely to produce factual content than GPT-3.5

- 82% less likely to respond to inappropriate requests

- Able to work with inputs of 25,000 words vs GPT3’s 1,500

- New feature that can understand images and use these when generating text

- Passes the US bar exam with a score in the top 10% of test takers, vs the bottom 10% for GPT-3.5

At earthware, we have been waiting for ChatGPT to support large volumes of text when asking a question. We believe this will be key to unlocking real value from ChatGPT in the pharmaceutical industry. Now that it is available, we have jumped in and looked at how it could be used to help apply the ABPI code of practice when writing or reviewing content.

How we did it

The key to success with ChatGPT is crafting the right question, or “prompt” as it is commonly called, so our first step was to build a prompt that contained:

- Instructions to ChatGPT about how we want it to answer the question:

“Answer the question as truthfully as possible using the provided context, and if the answer is not contained within the text below, say ‘I don't know’”

- All the text for the ABPI code clauses that are relevant to writing content (this has been shortened for readability):

“Context: 14.1 (7.3) A comparison is only permitted in promotional material if:

- it is not misleading

- medicines or services for the same needs or intended for the same purpose are compared

- one or more material, relevant, substantiable and representative features are compared

- no confusion is created between the medicine advertised and that of a competitor or between the advertiser’s trademarks, brand names, other distinguishing marks and those of a competitor”

- The question we want to ask:

“Q: Does this text follow the rules provided in the context:

[Our text to check goes here]”
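The three parts above can be assembled into a single prompt string in a few lines of code. The following is a minimal sketch rather than the exact code we used: the function names `build_prompt` and `within_word_limit`, the constant names, and the sample copy are all assumptions made for illustration, and the word check is only a rough guide because the model measures input in tokens rather than words.

```python
# Instruction text quoted from the prompt described above.
INSTRUCTIONS = (
    "Answer the question as truthfully as possible using the provided context, "
    "and if the answer is not contained within the text below, "
    'say "I don\'t know"'
)

# Approximate GPT-4 input size quoted earlier in this post, in words.
MAX_WORDS = 25_000


def build_prompt(code_clauses: str, text_to_check: str) -> str:
    """Join the instructions, the ABPI context and the question into one prompt."""
    return (
        f"{INSTRUCTIONS}\n\n"
        f"Context: {code_clauses.strip()}\n\n"
        "Q: Does this text follow the rules provided in the context:\n\n"
        f"{text_to_check.strip()}"
    )


def within_word_limit(prompt: str, max_words: int = MAX_WORDS) -> bool:
    """Crude size check that counts whitespace-separated words only."""
    return len(prompt.split()) <= max_words


if __name__ == "__main__":
    # Shortened context and made-up copy about the fictional product Xaxiris.
    clauses = (
        "14.1 (7.3) A comparison is only permitted in promotional material if:\n"
        "- it is not misleading"
    )
    copy_to_check = "Xaxiris works faster than any other treatment."
    prompt = build_prompt(clauses, copy_to_check)
    print(prompt)
    print("Within word limit:", within_word_limit(prompt))
```

Keeping the instructions and the code clauses fixed and swapping only the text to check makes it easy to run the same test against many pieces of copy.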

The results

Once we had crafted our “prompt”, we took it for a test drive.

Please note, the examples below do not include a real drug or treatment. Xaxiris is a fictional name and the following messages are also fictional examples.

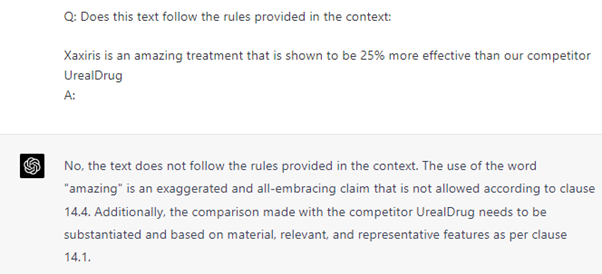

Example 1

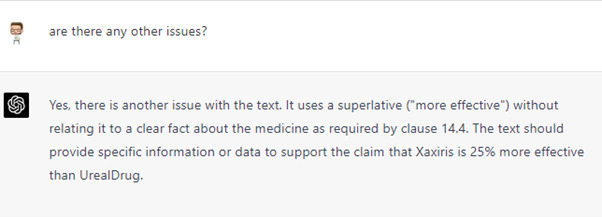

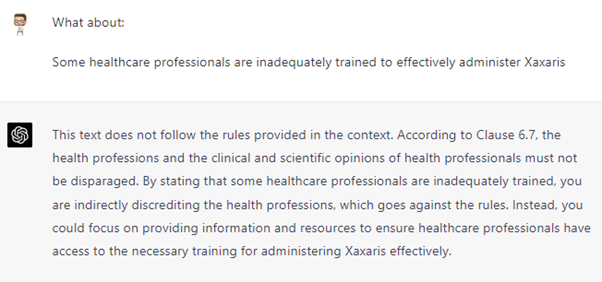

Example 2

Example 3

Conclusion

As you can see from the examples above, ChatGPT does a pretty good job of interpreting the code, including identifying issues in the copy and recommending how to improve it. Even though the examples we tried are less nuanced than real-life ones, they show that with very little investment of time, ChatGPT can deliver some real value.

If you are as excited by the possibilities as we are and would like to work with us to explore further, please get in touch.